In a landmark move toward responsible artificial intelligence (AI) development, the G7 (the United States, Canada, France, Germany, Italy, Japan, and the United Kingdom, together with the European Union) has finalized the International Guiding Principles for Organizations Developing Advanced AI Systems under the Hiroshima AI Process. Announced on October 30, 2023, these principles, alongside a voluntary Code of Conduct, aim to ensure AI technologies are safe, secure, and trustworthy while addressing global concerns like privacy, misinformation, and human rights. This initiative, led by Japan during its 2023 G7 presidency, marks a significant step in shaping a global framework for AI governance, with the United States playing a key role in its development.

What is the Hiroshima AI Process?

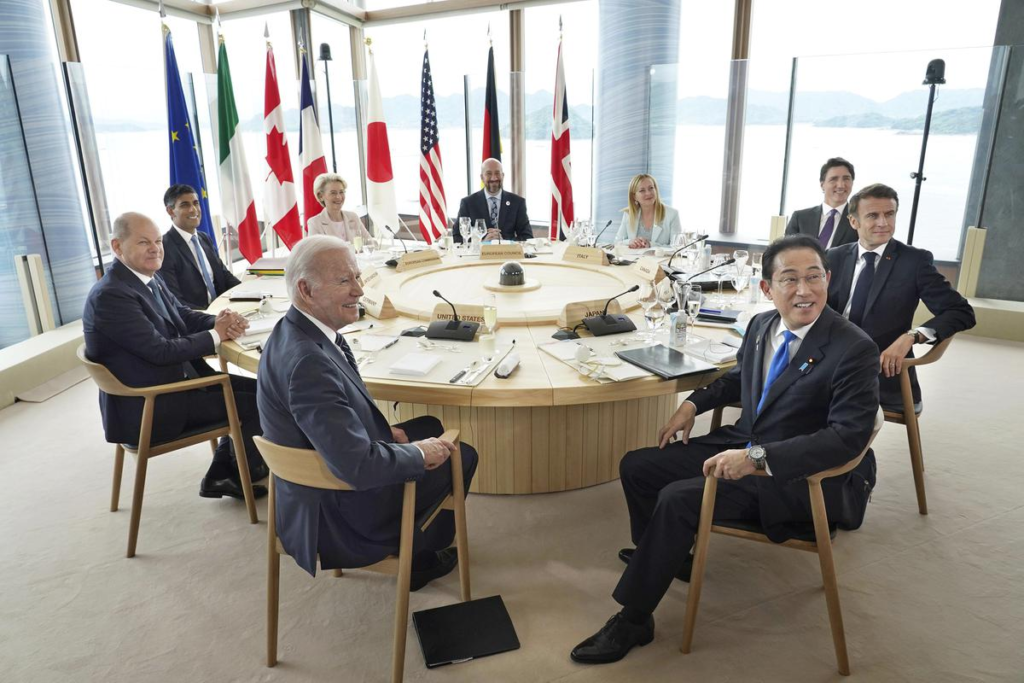

The Hiroshima AI Process was launched at the G7 Summit in Hiroshima, Japan, on May 19, 2023. Its goal was to create a unified approach to managing the opportunities and risks of advanced AI systems, particularly generative AI tools like ChatGPT. The process reflects a growing global awareness of AI’s transformative potential and the need to balance innovation with ethical responsibility. The G7 nations, recognizing AI’s ability to revolutionize industries, healthcare, and communication, also acknowledge its risks, such as spreading disinformation or enabling harmful applications.

The Hiroshima AI Process includes two core components: the International Guiding Principles and a voluntary Code of Conduct. These documents provide a roadmap for organizations developing AI, encouraging responsible practices while respecting democratic values and human rights. The principles build on existing frameworks, like the OECD AI Principles, and are designed to evolve with the rapidly changing AI landscape.

Key Features of the Guiding Principles

The International Guiding Principles consist of 11 key guidelines aimed at organizations involved in designing, developing, deploying, and using advanced AI systems. These principles are not legally binding but serve as a global benchmark for ethical AI development. Some of the standout points include:

- Risk Identification and Mitigation: Organizations are urged to identify and address risks before and after AI deployment, including external testing to ensure safety.

- Transparency and Accountability: Companies are called on to publicly disclose their AI governance and risk management policies to build trust and promote best practices.

- Information Sharing: The principles encourage collaboration across sectors to share knowledge and enhance AI safety.

- Cybersecurity Investments: Developers are advised to prioritize cybersecurity to protect AI systems from misuse, such as facilitating terrorism or disinformation.

- Human Rights Protections: AI systems should respect international human rights law and should not undermine democratic values or pose substantial risks to safety and security.

These guidelines address critical concerns, such as AI’s potential to lower barriers for non-state actors in developing dangerous technologies, including chemical, biological, or nuclear weapons. By setting these standards, the G7 aims to foster a global environment where AI benefits humanity without compromising safety or ethics.

The Voluntary Code of Conduct

Alongside the Guiding Principles, the G7 introduced a voluntary Code of Conduct to provide practical guidance for AI developers. This code offers detailed steps for implementing the principles, emphasizing a risk-based approach to AI governance. It encourages organizations to conduct regular compliance reporting, facilitate external audits, and monitor AI systems for vulnerabilities post-deployment. The code is designed to be flexible, allowing organizations to adapt it to their specific needs while promoting responsible AI practices worldwide.

The European Commission, a key contributor to the Hiroshima AI Process, has praised the code for reflecting EU values of trustworthy AI. European Commission President Ursula von der Leyen called on AI developers to adopt and implement the code swiftly, noting its compatibility with the EU’s AI Act, which was then still being finalized and is widely described as the world’s first comprehensive AI regulation.

The United States’ Role in the Hiroshima AI Process

The United States has been a driving force in shaping the Hiroshima AI Process, collaborating closely with other G7 nations and the OECD. U.S. agencies, including the Department of State, Department of Commerce, and the United States Patent and Trademark Office, actively participated in drafting the Guiding Principles. The U.S. also sought public comments on the draft guidelines, ensuring a broad range of perspectives from businesses, academia, and civil society.

The Biden administration has emphasized the importance of global cooperation in AI governance. In a statement from the White House, G7 leaders highlighted the need to balance AI’s innovative potential with safeguards for individuals, society, and democratic values. The U.S. has also pushed for the Hiroshima AI Process Comprehensive Policy Framework, agreed by the G7 in December 2023, which includes project-based cooperation with organizations like the OECD and the Global Partnership on Artificial Intelligence (GPAI).

The U.S. commitment to the Hiroshima AI Process aligns with its broader efforts to regulate AI domestically. For example, the U.S. has been working on executive actions and legislative proposals to address AI risks, such as those outlined in the White House’s Blueprint for an AI Bill of Rights. By supporting the G7’s principles, the U.S. aims to set a global standard for AI governance while fostering innovation in its tech industry.

Global Impact and Future Steps

The Hiroshima AI Process has gained significant traction, with approximately 49 countries and regions joining the voluntary Hiroshima AI Process Friends Group. This group, which includes nations beyond the G7, such as Singapore and South Korea, is committed to implementing the principles and code to ensure AI’s global benefits. The widespread adoption of these guidelines signals a growing consensus on the need for international cooperation in AI governance.

Looking ahead, the G7 plans to develop monitoring tools and mechanisms to hold organizations accountable for implementing the Code of Conduct. The Comprehensive Policy Framework, agreed in December 2023, supports these efforts through multi-stakeholder consultations and project-based initiatives. The OECD’s pilot of the Hiroshima AI Process reporting framework, conducted between July and September 2024, has already provided feedback for improving the guidelines’ effectiveness.

The Italian G7 Presidency in 2024 has prioritized disseminating and implementing the Code of Conduct, ensuring it reaches organizations worldwide. This ongoing effort underscores the G7’s commitment to creating a dynamic, adaptable framework that evolves with AI advancements.

Why This Matters for the United States

For the United States, the Hiroshima AI Process is a critical step toward maintaining its leadership in AI innovation while addressing ethical and security concerns. As a global tech hub, the U.S. is home to major AI developers like Google, Microsoft, and OpenAI, all of which stand to benefit from clear, consistent guidelines. These principles provide a framework for U.S. companies to align with international standards, fostering trust among consumers and regulators.

Moreover, the principles address pressing issues like disinformation, which has become a significant concern in the U.S., particularly during election cycles. By promoting transparency and accountability, the G7 guidelines encourage AI developers to combat misinformation and protect democratic processes. The emphasis on cybersecurity also aligns with U.S. national security priorities, ensuring AI systems are safeguarded against malicious actors.

Challenges and Criticisms

While the Hiroshima AI Process is a significant achievement, it is not without challenges. The voluntary nature of the Code of Conduct means compliance depends on organizations’ willingness to adopt it, which may lead to inconsistent implementation. Critics also note that the principles, while comprehensive, lack the enforceability of legally binding regulations like the EU’s AI Act. In the U.S., where AI regulation is still evolving, some argue that the G7 principles may not go far enough to address domestic concerns like algorithmic bias or data privacy.

Additionally, harmonizing AI governance across diverse regulatory approaches remains a challenge: the EU relies on a strict legislative framework, while the U.S. favors a more flexible, innovation-driven approach. The G7 will need to navigate these differences to ensure the principles remain relevant and effective globally.

Conclusion

The G7’s International Guiding Principles and Code of Conduct under the Hiroshima AI Process represent a pivotal moment in global AI governance. By fostering collaboration among the world’s leading economies, including the United States, the G7 is setting a foundation for safe, secure, and trustworthy AI development. For the U.S., these principles offer an opportunity to lead by example, balancing innovation with responsibility in a rapidly evolving tech landscape. As the G7 continues to refine its framework, the world watches closely, hopeful that these efforts will shape a future where AI benefits all of humanity.

For more information on the Hiroshima AI Process, see the White House statement on the process or the G7 Hiroshima Leaders’ Communiqué.
